For the impatient: the new improved algorithm described below is enabled by default and requires no changes to scripts or configuration files. However, the old algorithm is still available, and can be used by setting COM.OLDALG=1 in the configuration file.

For extended sources, MAKEMAP usually uses the mean normalised change in map pixel values between iterations as a measure of convergence. This value drops rapidly over the first few iterations, and eventually levels out, typically somewhere between 0.01 and 0.05. However, if MAKEMAP is allowed to continue iterating beyond this point, the mean normalised change between iterations often starts to increase and decrease by large amounts in an erratic manner. Examining the maps shows that these changes reflect the creation of nasty artifacts in the map, which often take the form of individual bolometer tracks. As an example, Dave Nutter saw the following variations in mean normalised change with iterations for one particular observation:

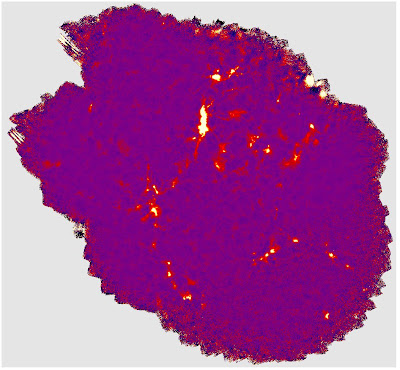

The curve shows a relatively smooth decrease until about iteration 50, and then madness. For illustration, the following shows the map after 50 iterations (left) and 200 iterations (right). Notice the visible streaks that have appeared by iteration 200.

The cause of this wild behaviour seems to be the way the common-mode signal is estimated and used. On each iteration, the common-mode value at each time slice is taken to be the mean of the bolometer values at that time slice, averaged over all remaining bolometers (i.e. bolometers that have not previously been flagged as unusable for one reason or another). Each bolometer time series is then split into blocks of 30 seconds and compared to the corresponding block of the common-mode signal. This comparison takes the form of a least-squares linear fit between the bolometer value and the common-mode value, and generates a gain, offset and correlation coefficient for each bolometer block. Bolometer blocks that look "unusual" - either because they have a low correlation coefficient (i.e. do not look like the common mode) or an unusually low or high gain - are flagged, and all the bolometer values within such blocks are rejected from all further processing.

So on the next iteration, the common mode within each block is estimated from only the subset of bolometers that passed this check on all previous iterations. With the passing of iterations, more and more bolometer blocks are rejected, often resulting in the common mode in a block being estimated from a completely different subset of bolometers to those of the neighbouring blocks. This produces discontinuities in the common-mode signal at the 30 second block boundaries, which get larger as more iterations are performed.

When the common mode is subtracted from the data, these discontinuities are transferred into the residuals, which are then filtered within the FLT model. The sharp edges at the discontinuities cause the FFT filtering to introduce ringing (strong oscillations on the scale length of the filter that extend over long times). The following plot shows an example of these discontinuities in the common-mode estimate:

The horizontal axis is sample index. The red curve is the common mode at iteration 85 and the white curve is the common mode at iteration 99. Due to down-sampling, 30 seconds corresponds to 2400 samples, and discontinuities at intervals of 2400 samples are clearly visible. In addition, extensive oscillations are visible, particularly between samples 48000 and 50000.
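To make the block-wise comparison described above concrete, here is a minimal NumPy sketch of it. It is not the SMURF code: the function names, the synthetic data, and the thresholds `min_corr` and `gain_tol` are all assumptions chosen for illustration. Only the overall recipe (mean over bolometers, then a least-squares fit of each bolometer block against the common mode giving a gain, offset and correlation coefficient) comes from the description above.

```python
import numpy as np

def common_mode(data, good):
    """Mean over usable bolometers at each time slice.

    data: (n_bolo, n_samp) array; good: boolean mask of the same shape.
    """
    n_good = good.sum(axis=0)
    return np.where(good, data, 0.0).sum(axis=0) / np.maximum(n_good, 1)

def fit_blocks(data, com, block_len):
    """Fit data = gain * com + offset by least squares in each block.

    Returns gain, offset and correlation arrays of shape (n_bolo, n_block).
    """
    n_bolo, n_samp = data.shape
    n_block = n_samp // block_len
    gain = np.empty((n_bolo, n_block))
    offset = np.empty((n_bolo, n_block))
    corr = np.empty((n_bolo, n_block))
    for b in range(n_block):
        sl = slice(b * block_len, (b + 1) * block_len)
        x, y = com[sl], data[:, sl]
        dx = x - x.mean()
        dy = y - y.mean(axis=1, keepdims=True)
        sxx, sxy, syy = dx @ dx, dy @ dx, (dy * dy).sum(axis=1)
        gain[:, b] = sxy / sxx
        offset[:, b] = y.mean(axis=1) - gain[:, b] * x.mean()
        corr[:, b] = sxy / np.sqrt(sxx * syy)
    return gain, offset, corr

def flag_unusual(gain, corr, min_corr=0.9, gain_tol=0.5):
    """Flag blocks with low correlation or a gain far from the block median.

    min_corr and gain_tol are hypothetical thresholds for illustration only.
    """
    med = np.median(gain, axis=0, keepdims=True)
    return (corr < min_corr) | (np.abs(gain - med) > gain_tol * np.abs(med))

# Synthetic data: 20 bolometers sharing one signal, with slightly different gains.
rng = np.random.default_rng(0)
block_len = 2400                      # 30 s of down-sampled data, as quoted above
n_bolo, n_samp = 20, 4 * block_len
t = np.arange(n_samp)
signal = 10.0 * np.sin(2.0 * np.pi * t / 3000.0)
gains = 1.0 + 0.05 * rng.standard_normal(n_bolo)
data = gains[:, None] * signal + 0.5 * rng.standard_normal((n_bolo, n_samp))
# Make bolometer 3 pure noise in block 2 so it no longer follows the common mode.
data[3, 2 * block_len:3 * block_len] = 0.5 * rng.standard_normal(block_len)

com = common_mode(data, np.ones(data.shape, dtype=bool))
gain, offset, corr = fit_blocks(data, com, block_len)
flagged = flag_unusual(gain, corr)
print(np.argwhere(flagged))           # (bolometer, block) pairs that look unusual
```

In this toy case only the deliberately corrupted bolometer block is flagged. In the old algorithm, a mask built from such flags would be fed back into `common_mode` on the next iteration, which is where the trouble described above begins.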

To avoid this problem, the way the common mode is estimated and used has been changed. The heart of the change is that bolometer blocks that are flagged as unusual are no longer excluded from the estimation of the common mode on the next iteration - all such flags are cleared before the new COM estimate is made. So the common mode is always based on data from all bolometers, thus avoiding the discontinuities at the block boundaries. Other more minor changes in the code include:

- The common-mode value at each time slice can be estimated using a sigma-clipped mean, rather than a simple mean. The new configuration parameters COM.NITER (the number of iterations) and COM.NSIGMA (the number of standard deviations at which to clip on each iteration) control this algorithm. In practice, sigma-clipping does not seem to add much, and may slow down convergence. At the moment, both of these parameters default to 3. Changing COM.NITER to 1 results in a single simple mean being calculated with no clipping. COM.NITER=1 may become the default in the near future.

- The common mode is no longer refined. Previously, once the original COM signal was estimated and unusual bolo-blocks were flagged, a refined COM signal was formed by excluding the flagged bolo-blocks. Further unusual bolo-blocks were then flagged by comparing them with the refined COM signal. This refinement process was repeated until no further bolo-blocks were rejected. In the new code, no attempt is made to refine the original COM signal, which makes the new algorithm significantly faster than the old one.
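As an illustration of the sigma-clipped mean mentioned in the first item, the following is a minimal sketch, not the SMURF implementation. The function name and the exact clipping rule are assumptions. The arguments `niter` and `nsigma` are meant to mirror COM.NITER and COM.NSIGMA as described above, so that `niter=1` gives a plain mean with no clipping.

```python
import numpy as np

def clipped_mean(values, niter=3, nsigma=3.0):
    """Sigma-clipped mean over bolometers (axis 0) at each time slice.

    niter means are computed in total, with values further than nsigma
    standard deviations from the current mean rejected between them.
    niter=1 therefore returns the simple mean.
    """
    values = np.asarray(values, dtype=float)
    keep = np.isfinite(values)
    for it in range(niter):
        n = np.maximum(keep.sum(axis=0), 1)
        mean = np.where(keep, values, 0.0).sum(axis=0) / n
        if it == niter - 1:
            break                      # last pass: no further clipping
        dev = np.where(keep, values - mean, 0.0)
        sigma = np.sqrt((dev * dev).sum(axis=0) / n)
        keep &= np.abs(values - mean) <= nsigma * sigma
    return mean

# 20 bolometers, 5 time slices, with one spike in bolometer 7 at slice 2.
values = np.ones((20, 5))
values[7, 2] = 100.0

simple = clipped_mean(values, niter=1)             # plain mean, pulled up by the spike
clipped = clipped_mean(values, niter=3, nsigma=3)  # spike rejected on the first pass
print(simple[2], clipped[2])
```

Note that with very few bolometers a single outlier can never exceed three standard deviations of the sample it belongs to, so clipping of this kind only has an effect when a reasonable number of values contribute to each time slice.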

Note that the new algorithm still uses COM.GAIN_BOX to define the size of the blocks in which the gain and offset of each bolometer are estimated. Also, "unusual" blocks are still flagged in the same way and excluded from the subsequent FLT model and map.
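The difference between the two approaches can be seen in a toy example. The sketch below is an illustration under simplifying assumptions, not the SMURF code: the bolometers differ only by a constant offset, the pattern of flagged blocks is invented, and all names are hypothetical. It compares a common mode estimated from a different subset of bolometers in each block (old behaviour) with one estimated from all bolometers (new behaviour), and measures the jump at each block boundary.

```python
import numpy as np

def masked_mean(data, good):
    """Mean over the bolometers marked good at each time slice."""
    n = np.maximum(good.sum(axis=0), 1)
    return np.where(good, data, 0.0).sum(axis=0) / n

block_len = 2400                       # 30 s of down-sampled data, as quoted above
n_bolo, n_block = 16, 4
n_samp = n_block * block_len
t = np.arange(n_samp)

# Every bolometer sees the same smooth signal plus its own constant offset.
signal = 10.0 * np.sin(2.0 * np.pi * t / 3000.0)
offsets = np.linspace(-2.0, 2.0, n_bolo)
data = signal[None, :] + offsets[:, None]

# Invented flag pattern: a different quarter of the bolometers is flagged in each block.
bolo = np.arange(n_bolo)[:, None]
block = np.arange(n_block)[None, :]
block_flags = (bolo + block) % 4 == 0              # shape (n_bolo, n_block)
sample_flags = np.repeat(block_flags, block_len, axis=1)

# Old behaviour: flagged blocks are excluded from the common-mode estimate.
com_old = masked_mean(data, ~sample_flags)
# New behaviour: flags are cleared first, so all bolometers always contribute.
com_new = masked_mean(data, np.ones_like(sample_flags))
# In both cases sample_flags would still be applied to the later FLT model and map.

edges = np.arange(block_len, n_samp, block_len)    # first sample of blocks 1, 2, 3
jumps_old = np.abs(com_old[edges] - com_old[edges - 1])
jumps_new = np.abs(com_new[edges] - com_new[edges - 1])
print("old:", jumps_old)
print("new:", jumps_new)
```

With the old approach the estimate steps at every block boundary, because the mean offset of the contributing bolometers changes from block to block. With the new approach the only change across a boundary is the ordinary sample-to-sample variation of the signal itself.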

The new algorithm seems effective at overcoming the problem of divergence illustrated at the start of this post. For instance, with the new code the variation of mean normalised change between iterations for the same data looks as follows:

Note that:

- The wild variations above iteration 80 have gone.

- The curve drops somewhat faster at the start (i.e. fewer iterations are needed to achieve a given maptol).

- Each estimation of the common-mode signal is faster than before due to the lack of the refining process.

- Far fewer samples are rejected from the final map (2.38% of the data is now flagged by the COM model, as opposed to 17.37% previously).

- The resulting maps at iterations 50 and 200 are shown below. There is very little difference between them, and both look very similar to the iteration 50 map from the old algorithm shown above.